Unchecked employee use of consumer AI tools creates an active internal risk, with sensitive data flowing out of organizations without visibility or default controls.

Martin Astley, CISO at Astley Digital Group, describes how AI providers leave CISOs blind, forcing companies to manage risk without transparency or built-in guardrails.

Leaders must audit real AI usage, reset trust assumptions, and push providers to make security and visibility standard rather than expensive add-ons.

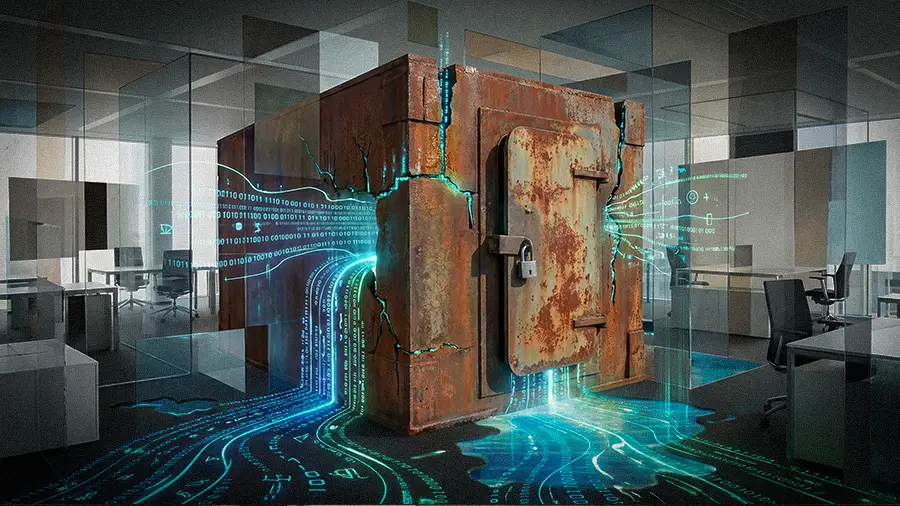

For most enterprises, the most serious AI risk is already inside the building. While executive leadership focuses on the promise of productivity, employees are quietly feeding sensitive data into consumer AI tools, often without realizing the exposure they’re creating. The problem is compounded by what many see as a failure by AI providers to build basic security guardrails into their platforms, which leaves enterprise customers to manage the risk themselves.

That tension sits squarely in the day-to-day reality of Martin Astley, Chief Information Security Officer at 24|7 Home Rescue and Astley Digital Group, who has spent more than a decade working at the intersection of IT, security, and risk. Having uncovered widespread, unmanaged AI use inside his own organization, Astley is blunt about where the responsibility lies. In his view, the current security gap isn’t a failure of awareness or tooling inside enterprises, but a provider problem.

"Right now there’s zero visibility into what’s going on, even with the premium AI licenses. We can’t see what our staff are doing, and we can’t put basic guardrails in place," says Astley. The issue became clear to him when he discovered that AI use had flooded his organization, with employees uploading reports and data long before any official policy was in place.

Silent but constant: The risk spreads quickly and quietly, with very few controls in place by default to mitigate it. "AI misuse has been happening silently and constantly ever since it was launched. People just use it, and they don’t think twice about what they’re uploading," he says.

F for effort: Astley points to a distorted economic reality where security becomes an afterthought rather than a built-in responsibility. Companies are forced to buy expensive overlay tools that monitor AI usage, often at several times the cost of the AI itself, simply to regain basic control. "Securing the tool is more expensive than the tool itself. It shouldn’t be like that," he says. In his view, the fix isn’t complex. "These AI companies are pouring all their energy into better speeds and bigger scales. They’re just not focused on security. A small amount of effort would make a big difference."

An insider in the machine: But provider negligence is only half the story. The other half is psychological. Astley says that users lower their guard and ignore even well-documented technical risks, like an AI model being compromised by a poisoned document. For Astley, the result is a critical cultural blind spot that security leaders must address. "By having ChatGPT, you’ve essentially got an insider within your business. Would you tell a new hire all of your company secrets? People feel comfortable giving that same sensitive data to an AI agent without a second thought."

The disconnect leaves many security leaders navigating a chaotic "plate of spaghetti" of fragmented governance. In this regulatory vacuum, leaders are largely on their own, working with emerging standards like the NIST AI Risk Management Framework in the absence of universal rules. Astley suggests that change requires applying pressure on providers now, rather than waiting for an incident that will force their hand.

Pressure or piper: "Change will come from leaders calling them out and making it clear this is what we need. It's either that, or it's going to take a major incident that catches media attention and forces these providers to finally act. That's what it will take."

The privacy trade-off: Astley applies the same logic to emerging tools like agentic browsers, acknowledging their power alongside the fundamental privacy trade-off. "Agentic browsers are like having somebody looking over your shoulder all the time. That technology is constantly watching every move you make, and the fundamental privacy concern is that we don't know where that data is going."

So what should leaders do? Astley urges them to move past assumptions and confront the reality of AI use within their own walls with a series of pointed questions. "Leaders need to ask themselves: Do you know what your employees are using ChatGPT for? How secure do you feel about the data being published into it? And what guardrails do you have in place right now? Because you probably don't have any."
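One way to start answering the first of those questions is to look at traffic the organization already logs. The sketch below is illustrative only and is not drawn from Astley's own tooling: it assumes a hypothetical web-proxy log in which each line reads `timestamp user method url bytes_sent`, and it uses a small, hand-picked watchlist of consumer AI domains that any real audit would need to extend. It tallies requests per user and host and adds up the bytes sent in POST requests as a rough proxy for uploads.

```python
from collections import Counter
from urllib.parse import urlsplit

# Illustrative watchlist (an assumption, not an exhaustive inventory).
AI_DOMAINS = {"chatgpt.com", "chat.openai.com", "claude.ai", "gemini.google.com"}


def host_matches(host, domains):
    """True if host equals a watched domain or is a subdomain of one."""
    host = host.lower().rstrip(".")
    return any(host == d or host.endswith("." + d) for d in domains)


def summarize_ai_usage(log_lines, domains=AI_DOMAINS):
    """Summarize traffic to watched AI domains.

    Assumes a hypothetical log format, one request per line:
        timestamp user method url bytes_sent
    with a full URL (including scheme). Lines that do not fit are skipped.
    Returns (requests per (user, host), POST bytes sent per user).
    """
    requests = Counter()
    posted_bytes = Counter()
    for line in log_lines:
        parts = line.split()
        if len(parts) != 5:
            continue  # malformed or differently formatted line
        _, user, method, url, bytes_sent = parts
        host = urlsplit(url).hostname
        if host is None or not host_matches(host, domains):
            continue
        requests[(user, host)] += 1
        if method == "POST" and bytes_sent.isdigit():
            posted_bytes[user] += int(bytes_sent)
    return requests, posted_bytes
```

Calling `summarize_ai_usage(open("proxy.log"))` on a log in that assumed format returns two counters that can be sorted with `most_common()` to show who is using which tool and how much data is leaving. A summary like this shows volume, not content, so it indicates where to look rather than what was shared.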

For Astley, the checklist points to a larger shift in thinking: treating security as an active, continuous discipline rather than the passive acquisition of technology. "Many organizations spend a lot of money on tooling, but then they leave it to gather dust, adopting a false sense of security that the tool will take care of all their problems," he concludes. "But security is a continuous cycle of improvement, not a purchase you can set and forget."